Homework 3 for Math 173A - Fall 2023

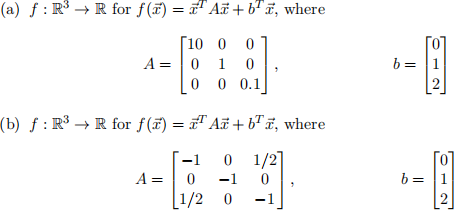

1. Using the conditions of optimality, find the extreme points of the following functions and determine whether they are maxima or minima. You may use a computer to find the eigenvalues, but the eigenvalues in these questions should be easy to find by hand.

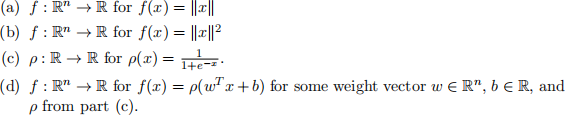

2. Determine whether each function is Lipschitz, and if so find the smallest possible Lipschitz constant for the function. For all problems, ‖·‖ represents the Euclidean norm (2-norm).

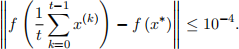

3. Let f be a convex, differentiable, L-Lipschitz function where L = 3. Let x* be the global minimum and suppose x^(0) is the initialization such that ‖x* − x^(0)‖ ≤ 5.

(a) Determine the number of steps needed to satisfy

(b) What is the associated choice of step size µ?

4. Coding Question: For this problem, you will need to download the MNIST data set. It is a data set of images of size 28x28 pixels. Each one is an image of a handwritten digit from 0-9.

We’ve uploaded a Jupyter notebook template to the HW folder (HW3 Q4.ipynb). It provides basic code for downloading and formatting MNIST, along with blocks showing where to put your code, results, and answers. If you prefer, you can also get the data set from the original source here:

http://yann.lecun.com/exdb/mnist/

or in convenient .csv file format here:

https://pjreddie.com/projects/mnist-in-csv/

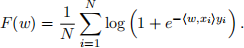

In the last assignment (Problem 2) you considered the classification problem with logistic regression

(1)

You also wrote down a gradient descent algorithm for it.

In this assignment, you’ll implement what you learned to classify MNIST digits. For all questions, you must submit your code and the requested answers. Your code must be present to receive points.

(a) Display one randomly selected image from your training data for each digit class. Provide the index number for each image.
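For part (a), one possible way to pick the images is sketched below. It only chooses one random index per digit class and leaves the display step to whatever plotting tool you use. The helper name `pick_one_index_per_class` and the toy label array are assumptions made for illustration; they are not part of the notebook template.

```python
import numpy as np

def pick_one_index_per_class(labels, rng):
    """Return {digit: index}, with one randomly chosen index for each class in `labels`."""
    labels = np.asarray(labels)
    chosen = {}
    for digit in np.unique(labels):
        candidates = np.flatnonzero(labels == digit)  # all indices holding this digit
        chosen[int(digit)] = int(rng.choice(candidates))
    return chosen

# Hypothetical stand-in for the MNIST training labels (20 examples of each digit).
rng = np.random.default_rng(0)
toy_labels = rng.permutation(np.repeat(np.arange(10), 20))

picked = pick_one_index_per_class(toy_labels, rng)
for digit, index in sorted(picked.items()):
    print(f"digit {digit}: index {index}")
```

The printed indices are the ones to report; each selected image can then be reshaped to 28 × 28 and displayed.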

(b) Select the first 500 examples each of the digits 0 and 1; these form the training data (x_i, y_i) ∈ R^784 × {−1, 1}, i = 1, ..., 1000. Assign the label y_i = 1 to 1s and y_i = −1 to 0s.

Note: To get from images of size 28 × 28 pixels to vectors in R^784, you just need to “vectorize” the image. This means you concatenate the 28 columns of the original image into one long vector of length 784. In Matlab, this is done with the command x(:); similarly, in Python with a NumPy array it’s x.flatten(order="F").
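The selection, labeling, and vectorizing steps for part (b) could look roughly like the sketch below. It assumes the images arrive as an array of shape (n, 28, 28) with a matching label array; `build_binary_set` and the toy data are hypothetical names used only for this illustration. If you load the .csv version, each row is already a length-784 vector and the flattening step is unnecessary.

```python
import numpy as np

def build_binary_set(images, labels, neg_digit=0, pos_digit=1, per_class=500):
    """Take the first `per_class` examples of each digit, flatten them, label them -1 / +1."""
    images = np.asarray(images, dtype=float)
    labels = np.asarray(labels)
    neg_idx = np.flatnonzero(labels == neg_digit)[:per_class]
    pos_idx = np.flatnonzero(labels == pos_digit)[:per_class]
    idx = np.concatenate([neg_idx, pos_idx])
    # Column-major flattening stacks the 28 columns, like Matlab's x(:).
    X = np.stack([images[i].flatten(order="F") for i in idx])
    y = np.concatenate([-np.ones(len(neg_idx)), np.ones(len(pos_idx))])
    return X, y

# Hypothetical toy data standing in for MNIST: 10 random "images" with labels.
rng = np.random.default_rng(0)
toy_images = rng.random((10, 28, 28))
toy_labels = np.array([0, 1, 2, 1, 0, 0, 1, 1, 0, 2])

X_toy, y_toy = build_binary_set(toy_images, toy_labels, per_class=3)
print(X_toy.shape, y_toy)
```

Any fixed flattening order works for the classifier as long as training and test images use the same one.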

i. Implement and run a gradient descent algorithm with step size µ = 10^−4 to optimize the function (1) associated with this setup. Run your algorithm for at least T = 10,000 iterations; if your computer can handle it, try T = 100,000 or choose a reasonable stopping criterion. Provide a plot showing the value of F(w) at each iteration. Feel free to make µ larger or smaller if the plot does not match your expectations.
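A minimal gradient descent loop for part i might be sketched as follows. Equation (1) is not reproduced here, so the sketch assumes the averaged logistic loss F(w) = (1/N) Σ log(1 + exp(−y_i w^T x_i)); if the version from the previous assignment is scaled differently, the loss and gradient need the same adjustment. The function names and the toy data are illustrative assumptions.

```python
import numpy as np

def logistic_loss(w, X, y):
    """Assumed form of (1): mean of log(1 + exp(-y_i * w^T x_i))."""
    margins = y * (X @ w)
    return np.mean(np.logaddexp(0.0, -margins))  # stable log(1 + exp(-m))

def logistic_grad(w, X, y):
    """Gradient of the assumed loss: -(1/N) * sum_i y_i x_i / (1 + exp(m_i))."""
    margins = y * (X @ w)
    weights = np.exp(-np.logaddexp(0.0, margins))  # equals 1 / (1 + exp(m))
    return -(X.T @ (y * weights)) / len(y)

def gradient_descent(X, y, mu=1e-4, T=10_000):
    """Run T fixed-step iterations from w = 0 and record F(w) at every iterate."""
    w = np.zeros(X.shape[1])
    history = np.empty(T + 1)
    history[0] = logistic_loss(w, X, y)
    for t in range(T):
        w = w - mu * logistic_grad(w, X, y)
        history[t + 1] = logistic_loss(w, X, y)
    return w, history

# Hypothetical toy problem: two Gaussian blobs in the plane instead of MNIST.
rng = np.random.default_rng(0)
X_toy = np.vstack([rng.normal(-1.0, 1.0, size=(50, 2)),
                   rng.normal(1.0, 1.0, size=(50, 2))])
y_toy = np.concatenate([-np.ones(50), np.ones(50)])

w_hat, history = gradient_descent(X_toy, y_toy, mu=0.1, T=200)
print(history[0], history[-1])
```

The `history` array is what the requested plot of F(w) per iteration would show. The toy run uses a larger step size than 10^−4 only because the toy features are small; with raw pixel values in the range 0 to 255 a much smaller step is typically needed.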

ii. Comment on the resulting plot. In particular, does the shape of F(w) suggest you’ve successfully converged to a local or global minimum? Does it appear you chose a good stopping criterion? Explain whether your answers to these questions are consistent with the theory we discussed in class (and in the notes). Be specific, i.e., point to a specific theorem (or theorems) and indicate why it does or does not explain the behavior of the algorithm. Would the theory dictate a different choice of µ than the one we used?

iii. Now, use the w you found in part (b)i. to classify the first 500 test data points for each of the handwritten digits 0 and 1. Recall that you need to use the function y = sign(w^T x) to classify. What was the classification error rate for the two digits on the test data (this should be a number between 0 and 1)? What was it on the training data? Does this relationship make sense?
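The error rate in part iii is the fraction of points where sign(w^T x) disagrees with the label, which could be computed along these lines. The helper `error_rate` and the tiny example are assumptions for illustration. Ties with w^T x = 0 are sent to +1 here, which is an arbitrary choice that the assignment does not specify.

```python
import numpy as np

def error_rate(w, X, y):
    """Fraction of rows of X whose predicted sign differs from the label in y."""
    scores = X @ w
    predictions = np.where(scores >= 0, 1.0, -1.0)  # assumption: a score of 0 maps to +1
    return float(np.mean(predictions != y))

# Hypothetical example with four points in the plane.
w_demo = np.array([1.0, -1.0])
X_demo = np.array([[2.0, 1.0], [0.0, 3.0], [1.0, 1.0], [-1.0, -2.0]])
y_demo = np.array([1.0, -1.0, -1.0, 1.0])

rate = error_rate(w_demo, X_demo, y_demo)
print(rate)  # 0.25: only the third point (score 0, label -1) is misclassified
```

Calling the same function once on the training set and once on the test set gives the two numbers the question asks you to compare.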

(c) Repeat parts (b)i. and (b)iii. for the digits 4 and 9. Comment on the difference between the results and propose a reason why the performance did or did not change.

2023-10-31